GitLab is an open DevOps platform. You can use the SaaS version, which requires no technical setup, or you can download, install, and maintain your own GitLab self-managed instance on your own infrastructure or in a public cloud environment. See the key differences between GitLab SaaS and self-managed.

In this blog post, we choose the self-managed version and deploy the instance with the official Helm chart, which is the recommended and supported method for installing GitLab in a cloud-native environment.

The gitlab/gitlab chart is the best way to operate GitLab on Kubernetes. It contains all the components required to get started and can scale to large deployments.

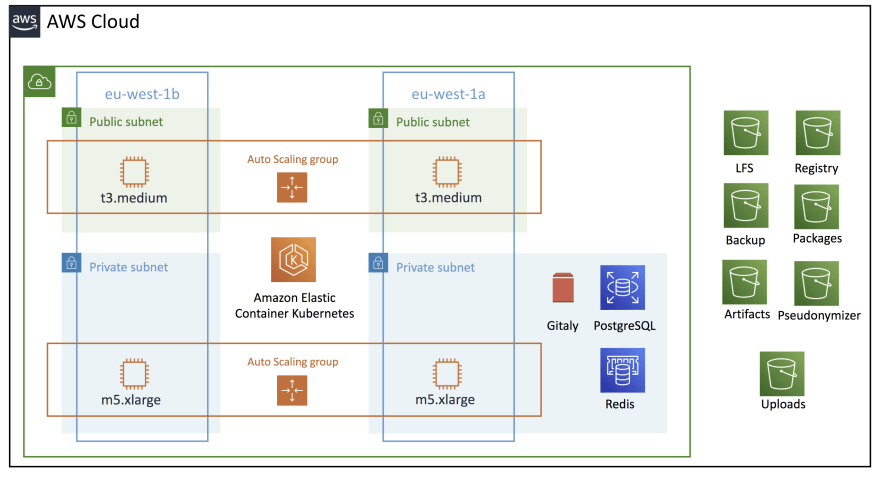

By default, the Helm chart deploys all components on Kubernetes. For a production-ready deployment in a cloud-native environment, we move the stateful dependencies to managed AWS services:

- PostgreSQL on Amazon RDS
- Redis on Amazon ElastiCache
- Object storage on Amazon S3
- An external EBS volume for Gitaly

Prerequisites

- The AWS CLI, installed and configured
- Terraform
- kubectl
- The Helm CLI
- A public hosted zone in Route 53 (see tutorial)
- A public certificate requested from AWS Certificate Manager (see tutorial)

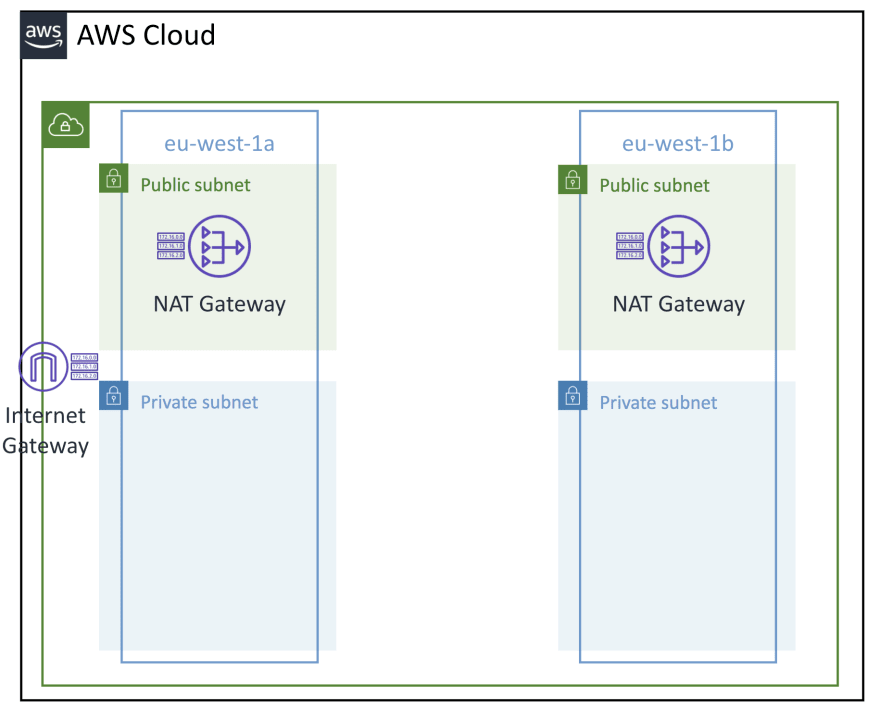

Network

In this section, we create a VPC with two private and two public subnets, two NAT gateways (one per public subnet), and an internet gateway.

plan/vpc.tf

```hcl
resource "aws_vpc" "devops" {
  cidr_block           = var.vpc_cidr_block
  instance_tenancy     = "default"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Environment = "core"
    Name        = "devops"
  }

  lifecycle {
    ignore_changes = [tags]
  }
}

# Adopting the default security group without declaring any rules
# removes all of its rules, so nothing relies on it by accident
resource "aws_default_security_group" "default" {
  vpc_id = aws_vpc.devops.id
}
```

plan/subnet.tf

```hcl
resource "aws_subnet" "private" {
  for_each = {
    for subnet in local.private_nested_config : subnet.name => subnet
  }

  vpc_id                  = aws_vpc.devops.id
  cidr_block              = each.value.cidr_block
  availability_zone       = var.az[index(local.private_nested_config, each.value)]
  map_public_ip_on_launch = false

  tags = {
    Environment = "devops"
    Name        = each.value.name
    # Lets Kubernetes place internal load balancers in these subnets
    "kubernetes.io/role/internal-elb" = 1
  }

  lifecycle {
    ignore_changes = [tags]
  }
}

resource "aws_subnet" "public" {
  for_each = {
    for subnet in local.public_nested_config : subnet.name => subnet
  }

  vpc_id                  = aws_vpc.devops.id
  cidr_block              = each.value.cidr_block
  availability_zone       = var.az[index(local.public_nested_config, each.value)]
  map_public_ip_on_launch = true

  tags = {
    Environment = "devops"
    Name        = each.value.name
    # Lets Kubernetes place internet-facing load balancers in these subnets
    "kubernetes.io/role/elb" = 1
  }

  lifecycle {
    ignore_changes = [tags]
  }
}
```

plan/nat.tf

```hcl
# One Elastic IP per public subnet, used by the NAT gateways
resource "aws_eip" "nat" {
  for_each = {
    for subnet in local.public_nested_config : subnet.name => subnet
  }

  vpc = true

  tags = {
    Environment = "core"
    Name        = "eip-${each.value.name}"
  }
}

resource "aws_nat_gateway" "nat-gw" {
  for_each = {
    for subnet in local.public_nested_config : subnet.name => subnet
  }

  allocation_id = aws_eip.nat[each.value.name].id
  subnet_id     = aws_subnet.public[each.value.name].id

  tags = {
    Environment = "core"
    Name        = "nat-${each.value.name}"
  }
}

# One private route table per NAT gateway, keyed by public subnet name
resource "aws_route_table" "private" {
  for_each = {
    for subnet in local.public_nested_config : subnet.name => subnet
  }

  vpc_id = aws_vpc.devops.id

  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.nat-gw[each.value.name].id
  }

  tags = {
    Environment = "core"
    Name        = "rt-${each.value.name}"
  }
}

# Each private subnet uses the route table of its associated public subnet
resource "aws_route_table_association" "private" {
  for_each = {
    for subnet in local.private_nested_config : subnet.name => subnet
  }

  subnet_id      = aws_subnet.private[each.value.name].id
  route_table_id = aws_route_table.private[each.value.associated_public_subnet].id
}
```

plan/igw.tf

```hcl
resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.devops.id

  tags = {
    Environment = "core"
    Name        = "igw-security"
  }
}

resource "aws_route_table" "public" {
  vpc_id = aws_vpc.devops.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }

  tags = {
    Environment = "core"
    Name        = "rt-public-security"
  }
}

resource "aws_route_table_association" "public" {
  for_each = {
    for subnet in local.public_nested_config : subnet.name => subnet
  }

  subnet_id      = aws_subnet.public[each.value.name].id
  route_table_id = aws_route_table.public.id
}
```

Amazon EKS

In this section, we create the Kubernetes cluster with the following settings:

- restrict public API access to a specific CIDR range (for example, your office IP range) and to the NAT gateway IPs (for the GitLab runners)
- enable all control plane log types
- enable IAM roles for service accounts (IRSA)
- dedicated security groups for the cluster

plan/eks-cluster.tf

```hcl
resource "aws_eks_cluster" "devops" {
  name     = var.eks_cluster_name
  role_arn = aws_iam_role.eks.arn
  version  = "1.18"

  vpc_config {
    security_group_ids      = [aws_security_group.eks_cluster.id]
    endpoint_private_access = true
    endpoint_public_access  = true
    # Public API access: the authorized range plus the NAT gateway IPs
    public_access_cidrs = concat([var.authorized_source_ranges], [for n in aws_eip.nat : "${n.public_ip}/32"])
    subnet_ids          = concat([for s in aws_subnet.private : s.id], [for s in aws_subnet.public : s.id])
  }

  enabled_cluster_log_types = ["api", "audit", "authenticator", "controllerManager", "scheduler"]

  # Ensure that IAM Role permissions are created before and deleted after EKS Cluster handling.
  # Otherwise, EKS will not be able to properly delete EKS managed EC2 infrastructure such as Security Groups.
  depends_on = [
    aws_iam_role_policy_attachment.eks-AmazonEKSClusterPolicy,
    aws_iam_role_policy_attachment.eks-AmazonEKSVPCResourceController,
    aws_iam_role_policy_attachment.eks-AmazonEKSServicePolicy
  ]

  tags = {
    Environment = "core"
  }
}

resource "aws_iam_role" "eks" {
  name = var.eks_cluster_name

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "eks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# OIDC provider that backs IAM roles for service accounts (IRSA)
data "tls_certificate" "cert" {
  url = aws_eks_cluster.devops.identity[0].oidc[0].issuer
}

resource "aws_iam_openid_connect_provider" "openid" {
  client_id_list  = ["sts.amazonaws.com"]
  thumbprint_list = [data.tls_certificate.cert.certificates[0].sha1_fingerprint]
  url             = aws_eks_cluster.devops.identity[0].oidc[0].issuer
}

resource "aws_iam_role_policy_attachment" "eks-AmazonEKSClusterPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.eks.name
}

resource "aws_iam_role_policy_attachment" "eks-AmazonEKSServicePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSServicePolicy"
  role       = aws_iam_role.eks.name
}

# Enable Security Groups for Pods
resource "aws_iam_role_policy_attachment" "eks-AmazonEKSVPCResourceController" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSVPCResourceController"
  role       = aws_iam_role.eks.name
}

resource "aws_security_group" "eks_cluster" {
  name        = "${var.eks_cluster_name}/ControlPlaneSecurityGroup"
  description = "Communication between the control plane and worker nodegroups"
  vpc_id      = aws_vpc.devops.id

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "${var.eks_cluster_name}/ControlPlaneSecurityGroup"
  }
}

resource "aws_security_group_rule" "cluster_inbound" {
  description              = "Allow unmanaged nodes to communicate with control plane (all ports)"
  from_port                = 0
  protocol                 = "-1"
  security_group_id        = aws_eks_cluster.devops.vpc_config[0].cluster_security_group_id
  source_security_group_id = aws_security_group.eks_nodes.id
  to_port                  = 0
  type                     = "ingress"
}
```

Next, we create two managed node groups: one in the private subnets and one in the public subnets.

plan/eks-nodegroup.tf

```hcl
resource "aws_eks_node_group" "private" {
  cluster_name    = aws_eks_cluster.devops.name
  node_group_name = "private"
  node_role_arn   = aws_iam_role.node-group.arn
  subnet_ids      = [for s in aws_subnet.private : s.id]

  labels = {
    "type" = "private"
  }

  instance_types = ["m5.xlarge"]

  scaling_config {
    desired_size = 2
    max_size     = 4
    min_size     = 2
  }

  # Ensure that IAM Role permissions are created before and deleted after EKS Node Group handling.
  # Otherwise, EKS will not be able to properly delete EC2 Instances and Elastic Network Interfaces.
  depends_on = [
    aws_iam_role_policy_attachment.node-group-AmazonEKSWorkerNodePolicy,
    aws_iam_role_policy_attachment.node-group-AmazonEKS_CNI_Policy,
    aws_iam_role_policy_attachment.node-group-AmazonEC2ContainerRegistryReadOnly
  ]

  tags = {
    Environment = "core"
  }
}

resource "aws_eks_node_group" "public" {
  cluster_name    = aws_eks_cluster.devops.name
  node_group_name = "public"
  node_role_arn   = aws_iam_role.node-group.arn
  subnet_ids      = [for s in aws_subnet.public : s.id]

  labels = {
    "type" = "public"
  }

  instance_types = ["t3.medium"]

  scaling_config {
    desired_size = 1
    max_size     = 3
    min_size     = 1
  }

  depends_on = [
    aws_iam_role_policy_attachment.node-group-AmazonEKSWorkerNodePolicy,
    aws_iam_role_policy_attachment.node-group-AmazonEKS_CNI_Policy,
    aws_iam_role_policy_attachment.node-group-AmazonEC2ContainerRegistryReadOnly,
  ]

  tags = {
    Environment = "core"
  }
}

resource "aws_iam_role" "node-group" {
  name = "eks-node-group-role-devops"

  assume_role_policy = jsonencode({
    Statement = [{
      Action = "sts:AssumeRole"
      Effect = "Allow"
      Principal = {
        Service = "ec2.amazonaws.com"
      }
    }]
    Version = "2012-10-17"
  })
}

resource "aws_iam_role_policy_attachment" "node-group-AmazonEKSWorkerNodePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
  role       = aws_iam_role.node-group.name
}

resource "aws_iam_role_policy_attachment" "node-group-AmazonEKS_CNI_Policy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
  role       = aws_iam_role.node-group.name
}

resource "aws_iam_role_policy_attachment" "node-group-AmazonEC2ContainerRegistryReadOnly" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
  role       = aws_iam_role.node-group.name
}

# Auto Scaling permissions for the cluster autoscaler
resource "aws_iam_role_policy" "node-group-ClusterAutoscalerPolicy" {
  name = "eks-cluster-auto-scaler"
  role = aws_iam_role.node-group.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = [
          "autoscaling:DescribeAutoScalingGroups",
          "autoscaling:DescribeAutoScalingInstances",
          "autoscaling:DescribeLaunchConfigurations",
          "autoscaling:DescribeTags",
          "autoscaling:SetDesiredCapacity",
          "autoscaling:TerminateInstanceInAutoScalingGroup"
        ]
        Effect   = "Allow"
        Resource = "*"
      },
    ]
  })
}

# EC2 volume and snapshot permissions for the EBS CSI driver
resource "aws_iam_role_policy" "node-group-AmazonEKS_EBS_CSI_DriverPolicy" {
  name = "AmazonEKS_EBS_CSI_Driver_Policy"
  role = aws_iam_role.node-group.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = [
          "ec2:AttachVolume",
          "ec2:CreateSnapshot",
          "ec2:CreateTags",
          "ec2:CreateVolume",
          "ec2:DeleteSnapshot",
          "ec2:DeleteTags",
          "ec2:DeleteVolume",
          "ec2:DescribeAvailabilityZones",
          "ec2:DescribeInstances",
          "ec2:DescribeSnapshots",
          "ec2:DescribeTags",
          "ec2:DescribeVolumes",
          "ec2:DescribeVolumesModifications",
          "ec2:DetachVolume",
          "ec2:ModifyVolume"
        ]
        Effect   = "Allow"
        Resource = "*"
      }
    ]
  })
}

# IRSA role for the kube-system/ebs-csi-controller-sa service account
resource "aws_iam_role" "ebs-csi-controller" {
  name = "AmazonEKS_EBS_CSI_DriverRole"

  assume_role_policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Effect" : "Allow",
        "Principal" : {
          "Federated" : aws_iam_openid_connect_provider.openid.arn
        },
        "Action" : "sts:AssumeRoleWithWebIdentity",
        "Condition" : {
          "StringEquals" : {
            "${replace(aws_iam_openid_connect_provider.openid.url, "https://", "")}:sub" : "system:serviceaccount:kube-system:ebs-csi-controller-sa"
          }
        }
      }
    ]
  })
}

resource "aws_security_group" "eks_nodes" {
  name        = "${var.eks_cluster_name}/ClusterSharedNodeSecurityGroup"
  description = "Communication between all nodes in the cluster"
  vpc_id      = aws_vpc.devops.id

  ingress {
    from_port = 0
    to_port   = 0
    protocol  = "-1"
    self      = true
  }

  ingress {
    from_port       = 0
    to_port         = 0
    protocol        = "-1"
    security_groups = [aws_eks_cluster.devops.vpc_config[0].cluster_security_group_id]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name        = "${var.eks_cluster_name}/ClusterSharedNodeSecurityGroup"
    Environment = "core"
  }
}
```

GitLab

Let's start by moving object storage out of the cluster.

External object storage

GitLab relies on object storage for highly available persistent data in Kubernetes. For production-quality deployments, GitLab recommends a hosted object storage service such as Amazon S3. [1]

In the following Terraform, we create S3 buckets for:

- Docker registry images
- Git Large File Storage (LFS), artifacts, uploads, packages, and the external pseudonymizer
- Backups
- The GitLab Runner cache

plan/s3.tf

```hcl
resource "aws_s3_bucket" "gitlab-registry" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-gitlab-registry"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Gitlab Registry"
    Environment = "core"
  }
}

resource "aws_s3_bucket" "gitlab-runner-cache" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-runner-cache"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Gitlab Runner Cache"
    Environment = "core"
  }
}

resource "aws_s3_bucket" "gitlab-backups" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-gitlab-backups"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Gitlab Backups"
    Environment = "core"
  }
}

resource "aws_s3_bucket" "gitlab-pseudo" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-gitlab-pseudo"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Gitlab Pseudo"
    Environment = "core"
  }
}

resource "aws_s3_bucket" "git-lfs" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-git-lfs"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Git Large File Storage"
    Environment = "core"
  }
}

resource "aws_s3_bucket" "gitlab-artifacts" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-gitlab-artifacts"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Gitlab Artifacts"
    Environment = "core"
  }
}

resource "aws_s3_bucket" "gitlab-uploads" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-gitlab-uploads"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Gitlab Uploads"
    Environment = "core"
  }
}

resource "aws_s3_bucket" "gitlab-packages" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-gitlab-packages"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }

  tags = {
    Name        = "Gitlab Packages"
    Environment = "core"
  }
}
```

We used server-side encryption with Amazon S3-managed keys (SSE-S3). You can easily replace it with server-side encryption with customer master keys (CMKs) stored in AWS Key Management Service (SSE-KMS). [2]
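As a minimal sketch of that switch, assuming a new customer managed key (the `aws_kms_key` resource `gitlab_s3` below is hypothetical and not part of the original setup), the registry bucket definition would become the following. The other buckets would change the same way.

```hcl
# Hypothetical customer managed key for the GitLab buckets
resource "aws_kms_key" "gitlab_s3" {
  description         = "GitLab object storage"
  enable_key_rotation = true
}

# Replaces the existing gitlab-registry definition: SSE-KMS instead of SSE-S3
resource "aws_s3_bucket" "gitlab-registry" {
  bucket = "${data.aws_caller_identity.current.account_id}-${var.region}-gitlab-registry"
  acl    = "private"

  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm     = "aws:kms"
        kms_master_key_id = aws_kms_key.gitlab_s3.arn
      }
    }
  }

  tags = {
    Name        = "Gitlab Registry"
    Environment = "core"
  }
}
```

With SSE-KMS, the gitlab-access role would also need permission to use the key (at least `kms:GenerateDataKey` and `kms:Decrypt` on `aws_kms_key.gitlab_s3.arn`); otherwise uploads and downloads are denied.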

IAM permissions

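To allow GitLab to access the S3 buckets, we create an IAM role that the aws-access service account in the gitlab namespace can assume through IAM roles for service accounts (IRSA).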

plan/iam.tf

```hcl
# Only the gitlab/aws-access service account can assume this role
resource "aws_iam_role" "gitlab-access" {
  name = "gitlab-access"

  assume_role_policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Effect" : "Allow",
        "Principal" : {
          "Federated" : aws_iam_openid_connect_provider.openid.arn
        },
        "Action" : "sts:AssumeRoleWithWebIdentity",
        "Condition" : {
          "StringEquals" : {
            "${replace(aws_iam_openid_connect_provider.openid.url, "https://", "")}:sub" : "system:serviceaccount:gitlab:aws-access"
          }
        }
      }
    ]
  })
}

resource "aws_iam_role_policy" "gitlab-access" {
  name = "gitlab-access"
  role = aws_iam_role.gitlab-access.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        # Bucket-level actions
        Action = [
          "s3:ListBucket",
          "s3:GetBucketLocation",
          "s3:ListBucketMultipartUploads"
        ]
        Effect = "Allow"
        Resource = [
          aws_s3_bucket.gitlab-backups.arn,
          aws_s3_bucket.gitlab-registry.arn,
          aws_s3_bucket.gitlab-runner-cache.arn,
          aws_s3_bucket.gitlab-pseudo.arn,
          aws_s3_bucket.git-lfs.arn,
          aws_s3_bucket.gitlab-artifacts.arn,
          aws_s3_bucket.gitlab-uploads.arn,
          aws_s3_bucket.gitlab-packages.arn
        ]
      },
      {
        # Object-level actions
        Action = [
          "s3:PutObject",
          "s3:GetObject",
          "s3:DeleteObject",
          "s3:ListMultipartUploadParts",
          "s3:AbortMultipartUpload"
        ]
        Effect = "Allow"
        Resource = [
          "${aws_s3_bucket.gitlab-backups.arn}/*",
          "${aws_s3_bucket.gitlab-registry.arn}/*",
          "${aws_s3_bucket.gitlab-runner-cache.arn}/*",
          "${aws_s3_bucket.gitlab-pseudo.arn}/*",
          "${aws_s3_bucket.git-lfs.arn}/*",
          "${aws_s3_bucket.gitlab-artifacts.arn}/*",
          "${aws_s3_bucket.gitlab-uploads.arn}/*",
          "${aws_s3_bucket.gitlab-packages.arn}/*"
        ]
      }
    ]
  })
}
```

External PostgreSQL database

PostgreSQL stores the GitLab database. We create a Multi-AZ RDS instance with two read replicas so that GitLab can load-balance read queries across them. Incoming traffic is only allowed from the private subnets.

plan/rds.tf

```hcl
resource "random_string" "db_suffix" {
  length  = 4
  special = false
  upper   = false
}

resource "random_password" "db_password" {
  length  = 12
  special = true
  upper   = true
  # RDS rejects "/", "@", '"' and spaces in the master password,
  # so "@" is removed from the default special character set
  override_special = "!#$%&*()-_=+[]{}<>:?"
}

resource "aws_db_instance" "gitlab-primary" {
  # Engine options
  engine         = "postgres"
  engine_version = "12.5"

  # Settings
  name       = "gitlabhq_production"
  identifier = "gitlab-primary"

  # Credentials Settings (the "p" prefix makes the password start with a letter)
  username = "gitlab"
  password = "p${random_password.db_password.result}"

  # DB instance size
  instance_class = "db.m5.xlarge"

  # Storage
  storage_type          = "gp2"
  allocated_storage     = 100
  max_allocated_storage = 2000

  # Availability & durability
  multi_az = true

  # Connectivity
  db_subnet_group_name   = aws_db_subnet_group.sg.id
  publicly_accessible    = false
  vpc_security_group_ids = [aws_security_group.sg.id]
  port                   = var.rds_port

  # Database authentication
  iam_database_authentication_enabled = false

  # Additional configuration
  parameter_group_name = "default.postgres12"

  # Backup
  backup_retention_period   = 14
  backup_window             = "03:00-04:00"
  final_snapshot_identifier = "gitlab-postgresql-final-snapshot-${random_string.db_suffix.result}"
  delete_automated_backups  = true
  skip_final_snapshot       = false

  # Encryption
  storage_encrypted = true

  # Maintenance
  auto_minor_version_upgrade = true
  maintenance_window         = "Sat:00:00-Sat:02:00"

  # Deletion protection
  deletion_protection = false

  tags = {
    Environment = "core"
  }
}

resource "aws_db_instance" "gitlab-replica" {
  count = 2

  # Engine options
  engine         = "postgres"
  engine_version = "12.5"

  # Settings
  name                      = "gitlabhq_production"
  identifier                = "gitlab-replica-${count.index}"
  replicate_source_db       = aws_db_instance.gitlab-primary.identifier
  skip_final_snapshot       = true
  final_snapshot_identifier = null

  # DB instance size
  instance_class = "db.m5.xlarge"

  # Storage
  storage_type          = "gp2"
  allocated_storage     = 100
  max_allocated_storage = 2000

  publicly_accessible    = false
  vpc_security_group_ids = [aws_security_group.sg.id]
  port                   = var.rds_port

  # Database authentication
  iam_database_authentication_enabled = false

  # Additional configuration
  parameter_group_name = "default.postgres12"

  # Encryption
  storage_encrypted = true

  # Deletion protection
  deletion_protection = false

  tags = {
    Environment = "core"
  }

  depends_on = [
    aws_db_instance.gitlab-primary
  ]
}

resource "aws_db_subnet_group" "sg" {
  name       = "gitlab"
  subnet_ids = [for s in aws_subnet.private : s.id]

  tags = {
    Environment = "core"
    Name        = "gitlab"
  }
}

# Only the private subnets can reach PostgreSQL
resource "aws_security_group" "sg" {
  name        = "gitlab"
  description = "Allow inbound/outbound traffic"
  vpc_id      = aws_vpc.devops.id

  ingress {
    from_port   = var.rds_port
    to_port     = var.rds_port
    protocol    = "tcp"
    cidr_blocks = [for s in aws_subnet.private : s.cidr_block]
  }

  egress {
    from_port   = 0
    to_port     = 65535
    protocol    = "tcp"
    cidr_blocks = [for s in aws_subnet.private : s.cidr_block]
  }

  tags = {
    Environment = "core"
    Name        = "gitlab"
  }
}
```

The instances are already encrypted at rest with the default AWS managed key (storage_encrypted = true). Here too, you can use your own AWS KMS key instead. [3]
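A minimal sketch, assuming a new customer managed key named `gitlab_rds` (hypothetical, not part of the original setup). Only the arguments that change on the primary instance are shown.

```hcl
# Hypothetical customer managed key for the GitLab database
resource "aws_kms_key" "gitlab_rds" {
  description         = "GitLab RDS storage"
  enable_key_rotation = true
}

resource "aws_db_instance" "gitlab-primary" {
  # ... all other arguments unchanged from plan/rds.tf ...

  # Encryption with the customer managed key instead of the default aws/rds key
  storage_encrypted = true
  kms_key_id        = aws_kms_key.gitlab_rds.arn
}
```

The key can only be chosen when the instance is created, so setting it on an existing instance makes Terraform plan a replacement. Read replicas in the same region are encrypted with the same key as their primary.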

External Redis

Redis stores GitLab job data. We use Amazon ElastiCache to create the Redis cluster, and only the EKS worker node groups are allowed to reach the Redis nodes on port 6379.

plan/elasticache.tf

```hcl
resource "aws_elasticache_cluster" "gitlab" {
  cluster_id           = "cluster-gitlab"
  engine               = "redis"
  node_type            = "cache.m4.xlarge"
  num_cache_nodes      = 1
  parameter_group_name = "default.redis3.2"
  engine_version       = "3.2.10"
  port                 = 6379
  subnet_group_name    = aws_elasticache_subnet_group.gitlab.name
  security_group_ids   = [aws_security_group.redis.id]
}

resource "aws_elasticache_subnet_group" "gitlab" {
  name       = "gitlab-cache-subnet"
  subnet_ids = [for s in aws_subnet.private : s.id]
}

resource "aws_security_group" "redis" {
  name        = "ElasticacheRedisSecurityGroup"
  description = "Communication between the redis and eks worker nodegroups"
  vpc_id      = aws_vpc.devops.id

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "ElasticacheRedisSecurityGroup"
  }
}

# Only traffic from the EKS cluster security group (used by the managed nodes) is allowed
resource "aws_security_group_rule" "redis_inbound" {
  description              = "Allow eks nodes to communicate with Redis"
  from_port                = 6379
  protocol                 = "tcp"
  security_group_id        = aws_security_group.redis.id
  source_security_group_id = aws_eks_cluster.devops.vpc_config[0].cluster_security_group_id
  to_port                  = 6379
  type                     = "ingress"
}
```

External persistent volume

Gitaly stores the Git repositories and therefore requires persistent storage, configured through persistent volumes that specify which disks the cluster can access.

GitLab currently recommends provisioning persistent volumes manually. Amazon EKS clusters span multiple Availability Zones by default, so dynamic provisioning that does not use a storage class locked to a particular zone can place a pod in a different zone from its storage volume, leaving the pod unable to access its data. [5]
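For comparison, a storage class locked to a single zone, which is what dynamic provisioning would need to avoid that problem, could look like the sketch below. The resource name `gitaly_single_zone` and the class name `ebs-gp2-single-az` are hypothetical; this post itself uses manual provisioning.

```hcl
resource "kubernetes_storage_class" "gitaly_single_zone" {
  metadata {
    name = "ebs-gp2-single-az"
  }

  storage_provisioner = "kubernetes.io/aws-ebs"
  reclaim_policy      = "Retain"
  # Create the volume only once a pod using the claim has been scheduled
  volume_binding_mode = "WaitForFirstConsumer"

  parameters = {
    type = "gp2"
  }

  # Restrict provisioning to the first availability zone only
  allowed_topologies {
    match_label_expressions {
      key    = "failure-domain.beta.kubernetes.io/zone"
      values = [var.az[0]]
    }
  }
}
```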

plan/ebs.tf

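```hcl
# Created in the first AZ; the Gitaly pod is pinned to the same zone
# through its nodeSelector in the Helm values
resource "aws_ebs_volume" "gitaly" {
  availability_zone = var.az[0]
  size              = 200
  type              = "gp2"

  tags = {
    Name = "gitaly"
  }
}
```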

Here too, you can encrypt the EBS volume with AWS KMS. [4]
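A minimal sketch, assuming a new customer managed key named `gitaly_ebs` (hypothetical, not part of the original setup); it replaces the `aws_ebs_volume.gitaly` definition above.

```hcl
# Hypothetical customer managed key for the Gitaly volume
resource "aws_kms_key" "gitaly_ebs" {
  description         = "Gitaly EBS volume"
  enable_key_rotation = true
}

resource "aws_ebs_volume" "gitaly" {
  availability_zone = var.az[0]
  size              = 200
  type              = "gp2"
  # Encrypt the volume at rest with the customer managed key
  encrypted  = true
  kms_key_id = aws_kms_key.gitaly_ebs.arn

  tags = {
    Name = "gitaly"
  }
}
```

An EBS volume cannot be encrypted in place, so applying this to an existing volume replaces it. Set it up before any repositories are stored. Depending on the key policy, the worker node role may also need permission to use the key so that the volume can be attached.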

That completes the AWS resources GitLab needs. Next, we create the Kubernetes resources.

Kubernetes resources

In this section, we create:

- the gitlab namespace
- the aws-access service account, needed to access the S3 object storage
- the gitlab-postgres secret, which stores the database password
- the s3-storage-credentials and s3-registry-storage-credentials secrets, used to access the S3 object storage
- the shell-secret secret for GitLab Shell
- the storage class, persistent volume, and persistent volume claim used by Gitaly

plan/k8s.tf

```hcl
resource "kubernetes_namespace" "gitlab" {
  metadata {
    name = "gitlab"
  }
}

# Referencing the namespace resource makes Terraform create it first.
# The role-arn annotation lets pods using this service account assume gitlab-access.
resource "kubernetes_service_account" "gitlab" {
  metadata {
    name      = "aws-access"
    namespace = kubernetes_namespace.gitlab.metadata[0].name
    labels = {
      "app.kubernetes.io/name" = "aws-access"
    }
    annotations = {
      "eks.amazonaws.com/role-arn" = aws_iam_role.gitlab-access.arn
    }
  }
}

resource "kubernetes_secret" "gitlab-postgres" {
  metadata {
    name      = "gitlab-postgres"
    namespace = kubernetes_namespace.gitlab.metadata[0].name
  }

  data = {
    psql-password = "p${random_password.db_password.result}"
  }
}

resource "kubernetes_secret" "s3-storage-credentials" {
  metadata {
    name      = "s3-storage-credentials"
    namespace = kubernetes_namespace.gitlab.metadata[0].name
  }

  data = {
    connection = data.template_file.rails-s3-yaml.rendered
  }
}

# Fog connection settings for LFS, artifacts, uploads, packages and pseudonymizer
data "template_file" "rails-s3-yaml" {
  template = <<EOF
provider: AWS
region: ${var.region}
EOF
}

resource "kubernetes_secret" "s3-registry-storage-credentials" {
  metadata {
    name      = "s3-registry-storage-credentials"
    namespace = kubernetes_namespace.gitlab.metadata[0].name
  }

  data = {
    config = data.template_file.registry-s3-yaml.rendered
  }
}

# Storage configuration for the container registry
data "template_file" "registry-s3-yaml" {
  template = <<EOF
s3:
  bucket: ${aws_s3_bucket.gitlab-registry.id}
  region: ${var.region}
  v4auth: true
EOF
}

resource "random_password" "shell-secret" {
  length  = 12
  special = true
  upper   = true
}

resource "kubernetes_secret" "shell-secret" {
  metadata {
    name      = "shell-secret"
    namespace = kubernetes_namespace.gitlab.metadata[0].name
  }

  data = {
    password = random_password.shell-secret.result
  }
}

# Statically provisioned volume backed by the EBS volume from plan/ebs.tf
resource "kubernetes_persistent_volume" "gitaly" {
  metadata {
    name = "gitaly-pv"
  }

  spec {
    capacity = {
      storage = "200Gi"
    }
    access_modes       = ["ReadWriteOnce"]
    storage_class_name = "ebs-gp2"

    persistent_volume_source {
      aws_elastic_block_store {
        fs_type   = "ext4"
        volume_id = aws_ebs_volume.gitaly.id
      }
    }
  }
}

# Named like the claim the Gitaly StatefulSet expects, so the chart reuses it
resource "kubernetes_persistent_volume_claim" "gitaly" {
  metadata {
    name      = "repo-data-gitlab-gitaly-0"
    namespace = kubernetes_namespace.gitlab.metadata[0].name
  }

  spec {
    access_modes       = ["ReadWriteOnce"]
    storage_class_name = "ebs-gp2"

    resources {
      requests = {
        storage = "200Gi"
      }
    }

    volume_name = kubernetes_persistent_volume.gitaly.metadata.0.name
  }
}

resource "kubernetes_storage_class" "gitaly" {
  metadata {
    name = "ebs-gp2"
  }

  storage_provisioner = "kubernetes.io/aws-ebs"
  reclaim_policy      = "Retain"

  parameters = {
    type = "gp2"
  }

  allowed_topologies {
    match_label_expressions {
      key    = "failure-domain.beta.kubernetes.io/zone"
      values = var.az
    }
  }
}
```

Helm

We finish by configuring and installing the GitLab Helm chart.

plan/gitlab.tf

```hcl
data "template_file" "gitlab-values" {
  template = <<EOF
# Values for gitlab/gitlab chart on EKS
global:
  serviceAccount:
    enabled: true
    create: false
    name: aws-access
  platform:
    eksRoleArn: ${aws_iam_role.gitlab-access.arn}
  nodeSelector:
    eks.amazonaws.com/nodegroup: private
  shell:
    authToken:
      secret: ${kubernetes_secret.shell-secret.metadata.0.name}
      key: password
  edition: ce
  hosts:
    domain: ${var.public_dns_name}
    https: true
    gitlab:
      name: gitlab.${var.public_dns_name}
      https: true
    ssh: ~
  ## doc/charts/globals.md#configure-ingress-settings
  ingress:
    tls:
      enabled: false
  ## doc/charts/globals.md#configure-postgresql-settings
  psql:
    password:
      secret: ${kubernetes_secret.gitlab-postgres.metadata.0.name}
      key: psql-password
    host: ${aws_db_instance.gitlab-primary.address}
    port: ${var.rds_port}
    username: gitlab
    database: gitlabhq_production
    load_balancing:
      hosts:
        - ${aws_db_instance.gitlab-replica[0].address}
        - ${aws_db_instance.gitlab-replica[1].address}
  redis:
    password:
      enabled: false
    host: ${aws_elasticache_cluster.gitlab.cache_nodes[0].address}
  ## doc/charts/globals.md#configure-minio-settings
  minio:
    enabled: false
  ## doc/charts/globals.md#configure-appconfig-settings
  ## Rails based portions of this chart share many settings
  appConfig:
    ## doc/charts/globals.md#general-application-settings
    enableUsagePing: false
    ## doc/charts/globals.md#lfs-artifacts-uploads-packages
    backups:
      bucket: ${aws_s3_bucket.gitlab-backups.id}
    lfs:
      bucket: ${aws_s3_bucket.git-lfs.id}
      connection:
        secret: ${kubernetes_secret.s3-storage-credentials.metadata.0.name}
        key: connection
    artifacts:
      bucket: ${aws_s3_bucket.gitlab-artifacts.id}
      connection:
        secret: ${kubernetes_secret.s3-storage-credentials.metadata.0.name}
        key: connection
    uploads:
      bucket: ${aws_s3_bucket.gitlab-uploads.id}
      connection:
        secret: ${kubernetes_secret.s3-storage-credentials.metadata.0.name}
        key: connection
    packages:
      bucket: ${aws_s3_bucket.gitlab-packages.id}
      connection:
        secret: ${kubernetes_secret.s3-storage-credentials.metadata.0.name}
        key: connection
    ## doc/charts/globals.md#pseudonymizer-settings
    pseudonymizer:
      bucket: ${aws_s3_bucket.gitlab-pseudo.id}
      connection:
        secret: ${kubernetes_secret.s3-storage-credentials.metadata.0.name}
        key: connection
nginx-ingress:
  controller:
    config:
      use-forwarded-headers: "true"
    service:
      annotations:
        service.beta.kubernetes.io/aws-load-balancer-backend-protocol: http
        service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "3600"
        service.beta.kubernetes.io/aws-load-balancer-ssl-cert: ${var.acm_gitlab_arn}
        service.beta.kubernetes.io/aws-load-balancer-ssl-ports: https
      targetPorts:
        https: http # the ELB will send HTTP to 443
certmanager-issuer:
  email: ${var.certmanager_issuer_email}
prometheus:
  install: false
redis:
  install: false
gitlab:
  gitaly:
    persistence:
      # Name of the existing persistent volume (not the claim)
      volumeName: ${kubernetes_persistent_volume.gitaly.metadata.0.name}
    nodeSelector:
      topology.kubernetes.io/zone: ${var.az[0]}
  task-runner:
    backups:
      objectStorage:
        backend: s3
        config:
          secret: ${kubernetes_secret.s3-storage-credentials.metadata.0.name}
          key: connection
    annotations:
      eks.amazonaws.com/role-arn: ${aws_iam_role.gitlab-access.arn}
  webservice:
    annotations:
      eks.amazonaws.com/role-arn: ${aws_iam_role.gitlab-access.arn}
  sidekiq:
    annotations:
      eks.amazonaws.com/role-arn: ${aws_iam_role.gitlab-access.arn}
  migrations:
    # Migrations pod must point directly to PostgreSQL primary
    psql:
      password:
        secret: ${kubernetes_secret.gitlab-postgres.metadata.0.name}
        key: psql-password
      host: ${aws_db_instance.gitlab-primary.address}
      port: ${var.rds_port}
postgresql:
  install: false
# https://docs.gitlab.com/ee/ci/runners/#configuring-runners-in-gitlab
gitlab-runner:
  install: true
  rbac:
    create: true
  runners:
    locked: false
registry:
  enabled: true
  annotations:
    eks.amazonaws.com/role-arn: ${aws_iam_role.gitlab-access.arn}
  storage:
    secret: ${kubernetes_secret.s3-registry-storage-credentials.metadata.0.name}
    key: config
EOF
}

resource "helm_release" "gitlab" {
  name       = "gitlab"
  namespace  = "gitlab"
  timeout    = 600
  repository = "https://charts.gitlab.io"
  chart      = "gitlab"
  version    = "4.11.3"
  values     = [data.template_file.gitlab-values.rendered]

  depends_on = [
    aws_eks_node_group.private,
    aws_eks_node_group.public,
    aws_db_instance.gitlab-primary,
    aws_db_instance.gitlab-replica,
    aws_elasticache_cluster.gitlab,
    aws_iam_role_policy.gitlab-access,
    kubernetes_namespace.gitlab,
    kubernetes_service_account.gitlab,
    kubernetes_secret.gitlab-postgres,
    kubernetes_secret.s3-storage-credentials,
    kubernetes_secret.s3-registry-storage-credentials,
    kubernetes_persistent_volume_claim.gitaly
  ]
}

# Load balancer created by the chart's NGINX ingress controller
data "kubernetes_service" "gitlab-webservice" {
  metadata {
    name      = "gitlab-nginx-ingress-controller"
    namespace = "gitlab"
  }

  depends_on = [
    helm_release.gitlab
  ]
}

# Points gitlab.<public_dns_name> at the load balancer hostname
resource "aws_route53_record" "gitlab" {
  zone_id = data.aws_route53_zone.public.zone_id
  name    = "gitlab.${var.public_dns_name}"
  type    = "CNAME"
  ttl     = "300"
  records = [data.kubernetes_service.gitlab-webservice.status.0.load_balancer.0.ingress.0.hostname]

  depends_on = [
    helm_release.gitlab,
    data.kubernetes_service.gitlab-webservice
  ]
}
```

Deployment

We've finished writing the GitLab resources. The remaining files define the shared data sources, outputs, variables, backend, and providers needed for the deployment.

plan/main.tf

```hcl
data "aws_caller_identity" "current" {}

data "aws_route53_zone" "public" {
  name = "${var.public_dns_name}."
}
```

plan/output.tf

```hcl
output "eks-endpoint" {
  value = aws_eks_cluster.devops.endpoint
}

output "kubeconfig-certificate-authority-data" {
  value = aws_eks_cluster.devops.certificate_authority[0].data
}

output "eks_issuer_url" {
  value = aws_iam_openid_connect_provider.openid.url
}

output "nat1_ip" {
  value = aws_eip.nat["public-devops-1"].public_ip
}

output "nat2_ip" {
  value = aws_eip.nat["public-devops-2"].public_ip
}
```

plan/variables.tf

variable "region" {

type = string

}

variable "az" {

type = list(string)

default = ["eu-west-1a", "eu-west-1b"]

}

variable "certmanager_issuer_email" {

type = string

}

variable "vpc_cidr_block" {

type = string

}

variable "eks_cluster_name" {

type = string

default = "devops"

}

variable "rds_port" {

type = number

default = 5432

}

variable "acm_gitlab_arn" {

type = string

}

variable "private_network_config" {

type = map(object({

cidr_block = string

associated_public_subnet = string

}))

default = {

"private-devops-1" = {

cidr_block = "10.0.0.0/23"

associated_public_subnet = "public-devops-1"

},

"private-devops-2" = {

cidr_block = "10.0.2.0/23"

associated_public_subnet = "public-devops-2"

}

}

}

locals {

private_nested_config = flatten([

for name, config in var.private_network_config : [

{

name = name

cidr_block = config.cidr_block

associated_public_subnet = config.associated_public_subnet

}

]

])

}

variable "public_network_config" {

type = map(object({

cidr_block = string

}))

default = {

"public-devops-1" = {

cidr_block = "10.0.8.0/23"

},

"public-devops-2" = {

cidr_block = "10.0.10.0/23"

}

}

}

locals {

public_nested_config = flatten([

for name, config in var.public_network_config : [

{

name = name

cidr_block = config.cidr_block

}

]

])

}

variable "public_dns_name" {

type = string

}

variable "authorized_source_ranges" {

type = string

description = "Addresses or CIDR blocks which are allowed to connect to the GitLab IP address. The default behavior is to allow access from anywhere (0.0.0.0/0). You should restrict access to the external IPs that need to reach the GitLab cluster."

default = "0.0.0.0/0"

}
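Since authorized_source_ranges is a free-form string with no validation block, Terraform accepts any value, including a malformed one such as "/32" left behind when the public IP lookup returns nothing. A minimal bash sketch of a syntax check you could run on the value before substituting it (is_ipv4_cidr is a hypothetical helper, and it only handles IPv4):

```shell
#!/usr/bin/env bash
# Sketch: return 0 if $1 is a syntactically valid IPv4 CIDR block (a.b.c.d/n).
is_ipv4_cidr() {
  local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})/([0-9]{1,2})$'
  local i
  [[ "$1" =~ $re ]] || return 1
  # Each octet must be in 0..255 (10# forces base 10 for values like "08").
  for i in 1 2 3 4; do
    (( 10#${BASH_REMATCH[i]} <= 255 )) || return 1
  done
  # Prefix length must be in 0..32.
  (( 10#${BASH_REMATCH[5]} <= 32 ))
}
```

For example: `is_ipv4_cidr "$(curl -s http://checkip.amazonaws.com/)/32" || echo "invalid source range"`.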

plan/backend.tf

terraform {

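# Partial configuration: bucket, key and region are passed with -backend-config at "terraform init".

backend "s3" {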

}

}

plan/versions.tf

terraform {

required_version = ">= 0.13"

}

plan/provider.tf

provider "aws" {

region = var.region

}

provider "kubernetes" {

host = aws_eks_cluster.devops.endpoint

cluster_ca_certificate = base64decode(

aws_eks_cluster.devops.certificate_authority[0].data

)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

args = ["eks", "get-token", "--cluster-name", var.eks_cluster_name]

command = "aws"

}

}

provider "helm" {

kubernetes {

host = aws_eks_cluster.devops.endpoint

cluster_ca_certificate = base64decode(

aws_eks_cluster.devops.certificate_authority[0].data

)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

args = ["eks", "get-token", "--cluster-name", var.eks_cluster_name]

command = "aws"

}

}

}
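Both exec blocks call `aws eks get-token`, and their api_version has to match the apiVersion of the ExecCredential that this command prints. Recent AWS CLI releases emit client.authentication.k8s.io/v1beta1, and newer Kubernetes client libraries have dropped v1alpha1. To see what your installed CLI returns, here is a small sketch; eks_token_api_version is a hypothetical name, and it assumes the command output is JSON containing an apiVersion key.

```shell
#!/usr/bin/env bash
# Sketch: print the apiVersion of the ExecCredential returned by
# `aws eks get-token`, to compare with the provider exec blocks.
eks_token_api_version() {
  local cluster="$1"
  # Extract the value of the "apiVersion" key from the JSON output.
  aws eks get-token --cluster-name "$cluster" \
    | sed -n 's/.*"apiVersion": *"\([^"]*\)".*/\1/p'
}
```

Usage: `eks_token_api_version "$EKS_CLUSTER_NAME"`.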

plan/terraform.tfvars

az = ["<AWS_REGION>a", "<AWS_REGION>b", "<AWS_REGION>c"]

region = "<AWS_REGION>"

acm_gitlab_arn = "<ACM_GITLAB_ARN>"

vpc_cidr_block = "10.0.0.0/16"

public_dns_name = "<PUBLIC_DNS_NAME>"

authorized_source_ranges = "<LOCAL_IP_RANGES>"

certmanager_issuer_email = "<CERTMANAGER_ISSUER_EMAIL>"

Initialize the AWS devops infrastructure. The Terraform state is stored in an S3 bucket, created below with public access blocked and versioning enabled.

export AWS_PROFILE=<MY_PROFILE>

export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

export AWS_REGION=eu-west-1

export EKS_CLUSTER_NAME="devops"

export R53_HOSTED_ZONE_ID=<R53_HOSTED_ZONE_ID>

export ACM_GITLAB_ARN=<ACM_GITLAB_ARN>

export CERTMANAGER_ISSUER_EMAIL=<CERTMANAGER_ISSUER_EMAIL>

export PUBLIC_DNS_NAME=<PUBLIC_DNS_NAME>

export TERRAFORM_BUCKET_NAME=bucket-${AWS_ACCOUNT_ID}-${AWS_REGION}-terraform-backend

# Create bucket

aws s3api create-bucket \

--bucket $TERRAFORM_BUCKET_NAME \

--region $AWS_REGION \

--create-bucket-configuration LocationConstraint=$AWS_REGION

# Make it not public

aws s3api put-public-access-block \

--bucket $TERRAFORM_BUCKET_NAME \

--public-access-block-configuration "BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true"

# Enable versioning

aws s3api put-bucket-versioning \

--bucket $TERRAFORM_BUCKET_NAME \

--versioning-configuration Status=Enabled

cd plan

terraform init \

-backend-config="bucket=$TERRAFORM_BUCKET_NAME" \

-backend-config="key=devops/gitlab/terraform-state" \

-backend-config="region=$AWS_REGION"
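The create-bucket call above always passes a LocationConstraint, which S3 rejects when the region is us-east-1. If you deploy there, a small wrapper like this one (create_state_bucket is a hypothetical name) handles both cases:

```shell
#!/usr/bin/env bash
# Sketch: create the Terraform state bucket in any region.
# S3 rejects LocationConstraint=us-east-1, so the configuration is omitted there.
create_state_bucket() {
  local bucket="$1" region="$2"
  if [ "$region" = "us-east-1" ]; then
    aws s3api create-bucket --bucket "$bucket" --region "$region"
  else
    aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=${region}"
  fi
}
```

Usage: `create_state_bucket "$TERRAFORM_BUCKET_NAME" "$AWS_REGION"`, followed by the same public-access-block and versioning commands.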

Replace the placeholders in plan/terraform.tfvars with the exported values, then apply the plan:

sed -i "s/<LOCAL_IP_RANGES>/$(curl -s http://checkip.amazonaws.com/)\/32/g; s/<PUBLIC_DNS_NAME>/${PUBLIC_DNS_NAME}/g; s/<AWS_ACCOUNT_ID>/${AWS_ACCOUNT_ID}/g; s/<AWS_REGION>/${AWS_REGION}/g; s/<EKS_CLUSTER_NAME>/${EKS_CLUSTER_NAME}/g; s,<ACM_GITLAB_ARN>,${ACM_GITLAB_ARN},g; s/<CERTMANAGER_ISSUER_EMAIL>/${CERTMANAGER_ISSUER_EMAIL}/g;" terraform.tfvars

terraform apply
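The sed substitution silently writes an empty string when one of the exported variables is unset, and any token you add to the file without a matching expression stays as-is. A minimal sketch of a pre-flight check on terraform.tfvars, to run before terraform apply, follows. check_tfvars is a hypothetical helper, and the empty-value test is only a heuristic.

```shell
#!/usr/bin/env bash
# Sketch: fail if terraform.tfvars still contains <PLACEHOLDER> tokens or
# empty string values; matching lines are printed with their line numbers.
check_tfvars() {
  local file="$1" status=0
  # Tokens such as <AWS_REGION> that sed did not substitute.
  if grep -nE '<[A-Z0-9_]+>' "$file"; then
    echo "error: unreplaced placeholders in ${file}" >&2
    status=1
  fi
  # Empty strings, e.g. when an exported variable was unset before sed ran.
  if grep -nE '=[[:space:]]*""' "$file"; then
    echo "error: empty values in ${file}" >&2
    status=1
  fi
  return "$status"
}
```

Usage: `check_tfvars terraform.tfvars && terraform apply`.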

Configure kubectl to access the EKS cluster:

aws eks --region $AWS_REGION update-kubeconfig --name $EKS_CLUSTER_NAME

Deploy the Amazon EBS CSI driver. The kustomize overlay below follows the master branch; for reproducible installs, consider replacing ref=master with a release of the driver:

kubectl apply -k "github.com/kubernetes-sigs/aws-ebs-csi-driver/deploy/kubernetes/overlays/stable/?ref=master"

Annotate the ebs-csi-controller-sa Kubernetes service account with the ARN of the IAM role that you created with Terraform:

kubectl annotate serviceaccount ebs-csi-controller-sa \

-n kube-system \

eks.amazonaws.com/role-arn=arn:aws:iam::$AWS_ACCOUNT_ID:role/AmazonEKS_EBS_CSI_DriverRole

Delete the driver pods:

kubectl delete pods \

-n kube-system \

-l=app=ebs-csi-controller

Note: The driver pods are automatically redeployed with the IAM permissions from the IAM policy assigned to the role. For more information, see Amazon EBS CSI driver.
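Instead of deleting the pods by hand, the annotation and restart can be wrapped in one idempotent step that also waits for the new pods. This sketch swaps in `kubectl rollout restart` and `kubectl rollout status` in place of the delete command above. It assumes the driver's Deployment is named ebs-csi-controller in kube-system, which is what the upstream manifests use at the time of writing (check with `kubectl get deployments -n kube-system`). The name refresh_ebs_csi_controller is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: annotate the EBS CSI controller service account, restart the
# controller Deployment and wait until the rollout completes.
# Assumes the Deployment is named ebs-csi-controller in kube-system.
refresh_ebs_csi_controller() {
  local account_id="$1"
  local role_arn="arn:aws:iam::${account_id}:role/AmazonEKS_EBS_CSI_DriverRole"

  # --overwrite makes the command safe to re-run.
  kubectl annotate serviceaccount ebs-csi-controller-sa -n kube-system \
    "eks.amazonaws.com/role-arn=${role_arn}" --overwrite || return 1

  # Recreate the pods so they pick up the role, then wait for them.
  kubectl rollout restart deployment/ebs-csi-controller -n kube-system || return 1
  kubectl rollout status deployment/ebs-csi-controller -n kube-system --timeout=180s
}
```

Usage: `refresh_ebs_csi_controller "$AWS_ACCOUNT_ID"`.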

Check that all resources were created properly:

$ kubectl config set-context --current --namespace=gitlab

$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/gitlab-cainjector-67dbdcc896-trv82 1/1 Running 0 12m

pod/gitlab-cert-manager-69bd6d746f-gllsw 1/1 Running 0 12m

pod/gitlab-gitaly-0 1/1 Running 0 12m

pod/gitlab-gitlab-exporter-7f84659548-pdgg7 1/1 Running 0 12m

pod/gitlab-gitlab-runner-78f9779cc5-ln8jv 1/1 Running 3 12m

pod/gitlab-gitlab-shell-79587877cf-k9cms 1/1 Running 0 12m

pod/gitlab-gitlab-shell-79587877cf-sp7qd 1/1 Running 0 12m

pod/gitlab-issuer-1-kmmlf 0/1 Completed 0 12m

pod/gitlab-migrations-1-kzwbw 0/1 Completed 0 12m

pod/gitlab-nginx-ingress-controller-d6cfd66cb-pwqw9 1/1 Running 0 12m

pod/gitlab-nginx-ingress-controller-d6cfd66cb-q2vqs 1/1 Running 0 12m

pod/gitlab-nginx-ingress-default-backend-658cc89589-tbn4f 1/1 Running 0 12m

pod/gitlab-registry-86d9c8f9cd-m6f9b 1/1 Running 0 12m

pod/gitlab-registry-86d9c8f9cd-t99dn 1/1 Running 0 12m

pod/gitlab-sidekiq-all-in-1-v1-5c5dcd6dbb-k9mpr 1/1 Running 0 12m

pod/gitlab-task-runner-5dff588987-z59k8 1/1 Running 0 12m

pod/gitlab-webservice-default-79dc48bcd4-8zc7t 2/2 Running 0 12m

pod/gitlab-webservice-default-79dc48bcd4-lq2j9 2/2 Running 0 12m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/gitlab-cert-manager ClusterIP 172.20.21.34 <none> 9402/TCP 12m

service/gitlab-gitaly ClusterIP None <none> 8075/TCP,9236/TCP 12m

service/gitlab-gitlab-exporter ClusterIP 172.20.183.92 <none> 9168/TCP 12m

service/gitlab-gitlab-shell ClusterIP 172.20.207.85 <none> 22/TCP 12m

service/gitlab-nginx-ingress-controller LoadBalancer 172.20.113.153 ae030366025b247398e8230174fbc4d3-1830148250.eu-west-1.elb.amazonaws.com 80:30698/TCP,443:31788/TCP,22:32155/TCP 12m

service/gitlab-nginx-ingress-controller-metrics ClusterIP 172.20.82.7 <none> 9913/TCP 12m

service/gitlab-nginx-ingress-default-backend ClusterIP 172.20.166.82 <none> 80/TCP 12m

service/gitlab-registry ClusterIP 172.20.47.110 <none> 5000/TCP 12m

service/gitlab-webservice-default ClusterIP 172.20.70.7 <none> 8080/TCP,8181/TCP 12m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/gitlab-cainjector 1/1 1 1 13m

deployment.apps/gitlab-cert-manager 1/1 1 1 13m

deployment.apps/gitlab-gitlab-exporter 1/1 1 1 13m

deployment.apps/gitlab-gitlab-runner 1/1 1 1 13m

deployment.apps/gitlab-gitlab-shell 2/2 2 2 13m

deployment.apps/gitlab-nginx-ingress-controller 2/2 2 2 13m

deployment.apps/gitlab-nginx-ingress-default-backend 1/1 1 1 13m

deployment.apps/gitlab-registry 2/2 2 2 13m

deployment.apps/gitlab-sidekiq-all-in-1-v1 1/1 1 1 13m

deployment.apps/gitlab-task-runner 1/1 1 1 13m

deployment.apps/gitlab-webservice-default 2/2 2 2 13m

NAME DESIRED CURRENT READY AGE

replicaset.apps/gitlab-cainjector-67dbdcc896 1 1 1 13m

replicaset.apps/gitlab-cert-manager-69bd6d746f 1 1 1 13m

replicaset.apps/gitlab-gitlab-exporter-7f84659548 1 1 1 13m

replicaset.apps/gitlab-gitlab-runner-78f9779cc5 1 1 1 13m

replicaset.apps/gitlab-gitlab-shell-79587877cf 2 2 2 13m

replicaset.apps/gitlab-nginx-ingress-controller-d6cfd66cb 2 2 2 13m

replicaset.apps/gitlab-nginx-ingress-default-backend-658cc89589 1 1 1 13m

replicaset.apps/gitlab-registry-86d9c8f9cd 2 2 2 13m

replicaset.apps/gitlab-sidekiq-all-in-1-v1-5c5dcd6dbb 1 1 1 13m

replicaset.apps/gitlab-task-runner-5dff588987 1 1 1 13m

replicaset.apps/gitlab-webservice-default-79dc48bcd4 2 2 2 13m

NAME READY AGE

statefulset.apps/gitlab-gitaly 1/1 13m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/gitlab-gitlab-shell Deployment/gitlab-gitlab-shell <unknown>/100m 2 10 2 13m

horizontalpodautoscaler.autoscaling/gitlab-registry Deployment/gitlab-registry <unknown>/75% 2 10 2 13m

horizontalpodautoscaler.autoscaling/gitlab-sidekiq-all-in-1-v1 Deployment/gitlab-sidekiq-all-in-1-v1 <unknown>/350m 1 10 1 13m

horizontalpodautoscaler.autoscaling/gitlab-webservice-default Deployment/gitlab-webservice-default <unknown>/1 2 10 2 13m

NAME COMPLETIONS DURATION AGE

job.batch/gitlab-issuer-1 1/1 10s 13m

job.batch/gitlab-migrations-1 1/1 3m1s 13m
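With this many components, scanning the output of kubectl get all by eye is error-prone. Here is a sketch of a filter (unhealthy_pods is a hypothetical name) that reads the NAME, READY and STATUS columns of `kubectl get pods --no-headers`. It prints only pods that are neither Completed nor Running with all containers ready.

```shell
#!/usr/bin/env bash
# Sketch: list pods in the current namespace that are not Completed and are
# either not Running or Running with fewer ready containers than expected.
unhealthy_pods() {
  kubectl get pods --no-headers | awk '
    {
      split($2, ready, "/")       # READY column, e.g. "1/2"
      if ($3 == "Completed") next
      if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
    }'
}
```

Run it with the gitlab namespace selected, as above. Empty output means every pod is either Completed or Running and fully ready.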

Log in to the GitLab web application as the root user. The initial root password is stored in a Kubernetes secret:

$ kubectl get secret gitlab-gitlab-initial-root-password \

-o go-template='{{.data.password}}' | base64 -d && echo

That’s it!

The source code is available on GitLab.

Conclusion

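In this article, we saw how to configure and deploy a production-ready GitLab instance on Amazon EKS, with PostgreSQL on Amazon RDS, Redis on Amazon ElastiCache, object storage on Amazon S3 and an external volume for Gitaly.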

I hope you enjoyed reading this blog post.

If you have any questions or feedback, please feel free to leave a comment.

Thanks for reading!

Documentation

[1] https://docs.gitlab.com/charts/advanced/external-object-storage/#docker-registry-images

[2] https://docs.gitlab.com/charts/advanced/external-object-storage/#s3-encryption

[3] https://docs.aws.amazon.com/kms/latest/developerguide/services-rds.html

[4] https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSEncryption.html

[5] https://docs.gitlab.com/charts/installation/cloud/eks.html#persistent-volume-management
